Daniel L. Johnson, MD

(C) Copyright 2003

All rights reserved

Free and Open Source is bringing a revolution in Information Technology practices and management, and has offered a new paradigm for effective development. This revolution in development brings with it the opportunity for two other changes:

- A paradigm for enterprise IT management, and

- A new business paradigm for IT, the professional services model. That is, one based on individual mastery of a common base of technology and knowledge, with commoditized access, analogous to medicine, accounting, and law.

This has far-reaching implications for vendors, for clients, and for programmers. It is a revolution. It is a revolution because of the power of publicly collaborative software development. That power is something the healthcare industry very much needs if medical records are ever to be easily portable, and portability is absolutely necessary for safe patient care. (See the story of Mary McLain to understand why.)

- I. Introduction
  - A. Consequences
  - B. Definitions
  - C. Opposing Basic Values
  - D. The IT Business Triangle
- II. The Open Source Paradigm for Management
  - A. The Professional Model
  - B. Software Libre changes the Control Hierarchy
  - C. Professionalism Requires Open Knowledge
- III. The Economics of Knowledge
  - A. The Business History of IT, Too Briefly
  - B. Commoditization and Net IT Investment
  - C. A History of Intellectual Property
  - D. Subtypes of Intellectual Property
  - E. Town versus Gown
  - F. Effects of Lock-in
  - G. The Effects of Freedom
  - H. What is the Business Model?
- IV. Open Source that Works
- V. Evolution of the Profession
- VI. Areas Requiring Open Source
  - A. Education
  - B. Health Care
  - C. Government
  - D. Military
- VII. Health Care: An Evolving Crisis
  - A. Mary McLain Gets Caught in the Medical Thicket
  - B. An Incomplete Medical Record is Preferred
- Appendix A: Open Source Healthcare Software Projects
- Appendix B: Misconceptions about Open Source (pending)

The growth and strength of software developed in the open derive from two features:

1) A set of practices: a particular type of collaborative framework and leadership style suited to distributed development; and

2) A set of conditions that create the environment in which this development can occur: restrictive licenses that keep the code open, and communications infrastructure that keeps it available.

Collaboration among users and programmers produces the highest quality software and the fastest possible functional maturation and evolution, and

Freedom means that no firm or individual can exploitively control the product. This makes open software of compelling interest to governments (who, ultimately, can't tolerate being controlled by foreign powers or their clients) and to users in general, because of the long history of financial exploitation of clients by proprietary software firms.

Open Source does two important things:

- It frees client firms from the vendor lock that has controlled their IT systems and their capital investment, and

- It frees programmers from intellectual exploitation by proprietary IT enterprises.

By doing these two things, Open Source creates an environment in which a professional services business model can grow and flourish.

In the long run, this will allow the finest programmers to work in a professional service role analogous to that of physicians, attorneys, and other professionals, in which highly trained experts compete on the basis of their mastery of, and skill in using, a common base of knowledge. I don't mean to imply that this model will replace the "captive-intellectual" model, only that it will likely characterize an important set of elite programmers.

The professional model implies that in the future the most successful IT firms will base their business model on service rather than product. Even so, the inherent complexity of integrated systems means that "product" will continue to be very important; in this respect IT differs from the traditional professions, which lack the same type of mechanistic technical base.

Lou Gerstner's understanding that the model for IT is service was the key to bringing IBM back from the brink of bankruptcy in the 1990s. He understood that clients believe they are purchasing functionality; the client is not particularly interested in the hardware itself, because without function it's just an expensive box. Clients are interested in service because service is what keeps their IT side, and their companies, functioning.

IT companies are discovering that GNU/Linux saves R&D costs, allowing them to focus on functionality and service. Cray has announced a new high-performance clustered system. What is its operating system? GNU/Linux. Why? Because of its quality, and because it was easier to adapt GNU/Linux to a new machine than to adapt Cray's own UNICOS. In addition, by using GNU/Linux, Cray makes its machine immediately available to every vendor currently running applications on UNIX or Linux, with only modest software modifications.

Before I go further, let me make it easy for myself. I am going to be deliberately imprecise and simply use "Open Source" as an all-inclusive term for Free, Liberated, and (merely) Open software. We can discuss the interesting distinctions among these terms some other time, but to keep the keystrokes down as I write, and in order to be easily understood, I'm going to use "Open" in the all-inclusive sense it has acquired. (If you want to understand the distinctions better, go to GNU.org and OpenSource.org.) I'll use lower case "open source" when I wish to emphasize its generic sense and upper case "Open Source" when emphasizing it as a movement. Furthermore, when I use the term "Linux," I am doing so only to save keystrokes: the technically accurate term is "GNU/Linux," as explained by Richard Stallman, who wouldn't mind being invited to his own party. Linux, the kernel, is useless without the GNU toolset. (And to give credit properly, I am writing this using GNU Emacs on a Red Hat 8.0 GNU/Linux system and checking the HTML formatting with OpenOffice and the Galeon browser.)

The "magic" is not the freedom, and it is not the GPL. Published code is essential to public collaboration; the GPL and other licenses are needed to protect its openness and to prevent piracy (by which I mean putting GPL'd code into a proprietary work and distributing the derived work without releasing its source). But if there's any magic, it's the magic of organization and hard work that characterizes the Free Software movement and its foster child, Open Source. Eric Raymond and Rob Landley have summarized this phenomenon very nicely in their response to the SCO-IBM complaint.

Open source development is a revolutionary new way of managing IT projects, and it is changing the way IT managers of client firms should manage their own systems and their relationships with vendors. One way to fully understand this revolution is first to read Fred Brooks' The Mythical Man-Month, to learn the best practices of single-site software development, and then to get acquainted with the process of open development by getting into one of the projects.

Open Source is characterized by the release of code ("intellectual product," in political terms) to users at least, and characteristically to the public. The Internet has made fast publication possible and has permitted collaboration among programmers and users who are widely scattered. The result has been sophisticated and effective procedures for distributed development.

My claim that open source is a new IT management paradigm raises some obvious questions about what I mean. Perhaps the answers are so self-evident that they require no explanation, but I'll say what I mean so that we can disagree intelligently, in case you wish to use different definitions or assumptions.

- What, in this context, is meant by management?

- What is new?

- What do we mean by paradigm?

- What, really, is open source?

- What is the new paradigm?

- Why does it exist?

- What might be its consequences?

- What opposing or counterbalancing influences exist?

To manage, in this essay, is to control an enterprise through discretionary authority and influence. To IT managers, the open source movement is foremost a management revolution: it provides a best-practices paradigm for distributed development, and it offers a means to escape vendor lock-in.

It does not involve completely new principles or values; what is new is the application of traditional values regarding knowledge and a rejection of financial gain as the sole reward or royalty for effort.

A paradigm is simply an exemplary model (something worth imitating) that provides a solution to a challenge.

Open Source has acquired two meanings.

- In the vernacular, it is used inclusively to refer to conditions or licenses under which source code is released into public view.

- The Open Source Initiative uses the term to encompass licenses under which code is published on terms broader than the GPL, as well as the GPL itself (which is owned by the Free Software Foundation). This revolution began with the Free Software Foundation and the General Public License, but the GPL is not equally satisfactory for all uses, and "Free" software has been specifically associated with the FSF and the GPL; in addition, "free" in English is ambiguous. The best sense of "free" in regard to software is not gratis, but libre. Alternative inclusive terms are FOSS (Free and Open Source Software), which tries not to trespass on the territory staked out by the OSI, and, in Europe, Free/Libre and Open Software (FLOSS), which has gained currency because it accurately conveys the meaning of "free," never mind the allusion to dental hygiene.

I dislike contrived language, so I will use lower case (open source) when using the vernacular, generic sense and upper case (Open Source) when referring to software that probably fits the OSI's Ten Requirements. (And since I wrote this sentence after writing the essay, and am not a perfect proofreader, I must ask you, dear reader, to forgive inconsistencies or to email snippets to me so that I can correct them.)

The new paradigm is an evolved system of best practices that is used to develop projects in the open. Fundamentally, it requires the following:

1: Connectedness - the World Wide Web, for development libre.

2: A project (ahem...), that is, something that puts code to work.

3: Accessible coding practices (e.g., self-documenting, modular).

4: Concurrent version control that documents changes as they are made (e.g., CVS).

5: A forum for "discussion" (debate).

6: Respected leadership.

7: Users (testing, functionality needs, feature requests).

8: Productization: packaging for installation & maintenance.

This new paradigm exists because of social and economic pressures that have frustrated other avenues of progress.

The consequence of its existence is the subversion of the closed, proprietary model of business IT development that treats knowledge as product, and that model's evolution into a service model of IT development staffed and guided by professionals.

It will be opposed by those who do not understand its development or are threatened by it. It will be weakened to the extent that businesses are able to make proprietary standards and protocols dominant. The tools used to oppose it will be intellectual property law (read Lawrence Lessig's books, Code and The Future of Ideas) and marketing guile (you don't need a reference; you've seen ads).

The proprietary software model and the software libre model are diametrically opposed on one value: whether ideas should be shared for community benefit or kept secret for individual exploitation.

The two are not entirely incompatible, however. The Free Software Foundation would argue that all knowledge should be open, but it's a reality that in commercial enterprise of all kinds, knowing something special is often a key to competitive success. The secret is an intrinsic part of capitalism. (Yes, I know that microeconomic theory presupposes open knowledge, but as every farmer knows, true commoditization is the road to poverty. The key to success in real-world entrepreneurial capitalism is having something special, and secrets are best, as every competitor knows.)

Capitalism is aimed at creating Riches. Secrets are valued. But each enterprise fights alone. The lottery mentality of new ventures hides the fact of frequent failure. Most new startups fail. The capitalist idealistically considers exploitation the means by which a profit is made from utilization of resources; this is not inherently unjust. Control of resources, labor, markets, or clients brings a sense of security to the capitalist. Lest this seem harsh, it is capitalism's strengths that feed and clothe this society. This is the paradigm of "Town." (See below.)

Civilization has brought us more than organized capitalism; it has brought organized learning: academia. (In fact, it's academia that has understood and refined capitalism.) But the wealth of academia is not profitability; it's Knowledge. In academia, teaching is an end and learning a goal. The values of academia are that knowledge should be spread, widely distributed for its community benefits. Publication is a means of distributing knowledge and preserving it for successive generations. Resources are something to share, not to reserve privately. This is the paradigm of "Gown." More on this later.

Software libre arose from the academic world, not from the business world. And so, although neither participants nor observers of the scene discuss it much, the open source revolution arises from different values than those which drive entrepreneurial capitalism. The values are implicit in the choices of action taken by their adherents.

These presuppositional values differ in that "business" places highest priority on "creating wealth." Their only conscious goal is wealth for the owner. Wealth for society at large is assumed to occur as an inevitable natural consequence of idiocentric market actions (e.g., as stated in the Fundamental Theorem of Welfare Economics). I'll not argue here whether these assumptions are supportable; if you're interested in pursuing this, read Dr. Walter Schultz's The Moral Conditions of Economic Efficiency, Cambridge Univ. Press, 2001. ("Moral" refers to social norms, not to any religious rules.) Dr. Schultz formally and rigorously proves the Fundamental Theorem wrong, especially its claim that idealized economic agents acting solely in their own interest are sufficient to create an orderly market. He then proves that it is necessary to have a set of behavioral (social) norms in order for efficient markets to exist.

The tension between proprietary software and free software is the tension between community and the individual; it is also the tension between the claim that there are (or need to be) no rules beyond market forces, and the idea that the market "agents" must act within basic social norms, in order to have orderly and efficient markets.

When Schultz proves that normative constraints (community-based behavioral rules) are necessary in the market, he lays a foundation for the business case for free/libre and open software, as a preferred alternative to proprietary software.

The values of "free source" or "source libre" are that knowledge should be publicly available. This is the tradition of the university, of the pursuit of knowledge for the benefit of mankind and the love of teaching. Its business model is the expert consultant or professional service provider, who is not captive to any firm.

The term "open source" has come to describe a middle ground. The ambiguity of English confounds "gratis" and "libre." This is confusing not just because "Free Source" software is normally gratis on the Internet, but also because the GNU General Public License prohibits royalties. The confusion is exacerbated by the pre-existence of "freeware" in the Apple and PC worlds.

But beyond the ambiguity, there is also a need for Free Source licenses other than the GPL: licenses that are compatible with publishing source but that grant the user different rights. Some licenses are more and others less restrictive than the GPL. "Open source" has evolved as a term to describe every situation in which source code is published, including publication under terms different from those of the GPL.

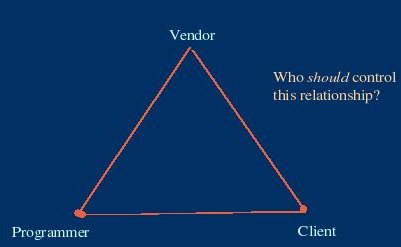

IT development and maintenance involve three participants:

- Programmers (individuals),

- Vendors (firms), and

- Clients (firms).

The currently dominant model of business development depends on intellectual property law that penalizes the release or unauthorized use of code. Vendors use the prohibitions of copyright law to control programmers, by requiring them to permanently assign to their employer all rights to the code they produce, and to control clients, by prohibiting the client firm from even having access to the code on which its business depends.

This system of "intellectual property rights" can be rationally defended. This defense rests on assumptions that include placing a higher priority on individual (or corporate) gain than on community benefit: from this point of view, essentially no community benefit is considered a priori sufficient to require providing public access to the work.

The capitalistic model for investment involves a lottery mentality: most startups (indeed, most firms) fail sooner or later. The goal of venture capitalists is short-term riches through transient market dominance, not long-term wealth, and the procedures and priorities of the capital markets reflect this.

The net result of intellectual property law is to give software vendor firms complete control:

- control of programmers, through contractually demanding permanent ownership of all their output in return for wages and a job that is not a sinecure.

- control of clients through prohibiting access to software, restricting its use severely, and maintaining complete control over schedules for development, maintenance, upgrades, and termination of support.

This situation becomes uncomfortable when vendor control is exercised in disregard of the client firm's needs for stability and performance, and its capital investment calendar.

The control that vendors have over programmers is uncomfortable when it becomes unfairly exploitive of their needs for security and for appropriate reward.

Control of "intellectual property" is exercised through copyright, patent, and trade secret law. Although trade secret action has been attempted, it is unlikely to succeed against open source adopters, for the simple reason that nothing in the open can be considered a trade secret. Even if the code was stolen or maliciously released, the innocent user is protected; it is the thief who is liable. (From a cogent review of trade secret law by Kent Avery, Karen Bedingfield, Elaine Cheung, Nicole Cobb, and Tatiana Connolly, students of The University of Connecticut School of Law.)

The genius of the GPL is that it turns intellectual property law on its head by using it to enforce distribution. The royalty which may be gained is the collective contributions of participants in collaborative development, the worth of which, in an active project, far exceeds the royalty income the author could have expected.

Management is the exercise of discretionary control or authority over an activity. Its key requirement is possessing control or leadership authority. Possessing control permits rapid adaptation and response to changing conditions, and efficient exploitation of resources and personnel (exploitation may be just or unjust). Being controlled prevents free use of managerial discretion and timing, and threatens the prosperity and stability of a business. For this reason managers hate being controlled. And of course vendor lock-in entails control of the client by the vendor.

Management, to be effective, thus always needs some form of control over the goal-directed activities of a group. The traditional model of IT business management exploits intellectual property law to rigidly control both the programmer and the client, with the goal of generating high profits for the vendor's owners. This control also creates "vendor lock," which increases business stability for the vendor and decreases it for the client.

The client also values control, as it permits rapid response to changing business conditions and makes budgeting and market planning easier. To the client, purchased IT services are typically a small fragment of the business, and the cost of an expensive and rigid system may be offset by its usefulness for analysis or productivity.

Being controlled becomes uncomfortable for the client if the fees paid for IT greatly exceed the productivity gains derived from its functionality, if the system cannot be modified quickly in response to a sea change in the business, or if the vendor changes the IT system unilaterally. The product may be "upgraded" unilaterally, removing important functionality; it may be "sunsetted," requiring unplanned software conversion; or the vendor firm may go out of business or be acquired by another firm and the software simply replaced.

Observers of the IT industry often forget that this control may also be uncomfortable for the programmer, who, although the author of the code, is prohibited from accessing it or profiting from it if dismissed or if the company fails.

Fundamentally, for the programmer, software libre is the freedom to code. Without it, a programmer must work for a large corporation to participate in significant work. Because of software libre, any programmer is free to work on any part of any open project, consistent with personal interest and ability. This is a tremendous reward, especially for those who have labored in the dungeons of large corporations.

As people in general hate being controlled or exploited, control motivates them to promote change.

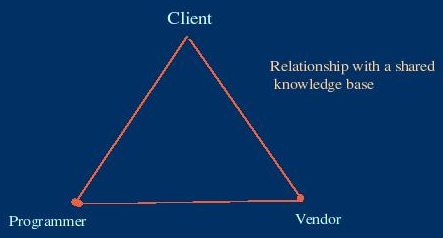

As I said at the top, the emerging business paradigm for Information Technology management is a professional services model based on individual mastery of a common knowledge base, analogous to medicine and law. This has far-reaching implications for vendors, clients, and programmers.

The Free Software Foundation and the GPL were a direct response to the unilateral control exercised by vendors. The GPL specifically requires only that code be available to clients, but Richard Stallman's writings are clear that the underlying philosophy is to freely publish code. This liberates the code, but more significantly liberates both the programmer and the client from unfair exploitation by the vendor.

Code libre becomes a body of public knowledge, which can be used by anyone with the requisite training and expertise. This is exactly comparable to law and medicine. Both professions thrive, not because practitioners have secret, proprietary knowledge, but because they provide clients with expert service based on publicly available knowledge.

In this model, prosperity is derived from mastery of a difficult body of knowledge, not from restrictive or private ownership of it. Providers compete on quality and customer satisfaction, which are based on skill and good judgment.

The paradigm of public knowledge and expert service fits any situation in which the knowledge benefits the public broadly, either by being universally applicable (law) or by being unpredictably needed by any one person (medicine).

IT has become a broad public need by its success in becoming a tool of expression, analysis, communication, and entertainment. The personal computer has become a cultural necessity both at home and in business.

Its ubiquity has made IT seem to be a commodity, and therefore users expect commodity pricing and availability. The Free Software model, which grants rights of use broadly, fits this sense of public need. As a side effect, it creates a commons of public knowledge. Because mastery of software requires extensive training, a class of experts emerges who, as professionals, provide service to users.

When software is freed, vendors no longer have absolute control of programmers and clients, which limits their ability to exploit both. They may feel threatened by this because their business model has been built on the expectation of control ("lock-in"). But they still have tremendous power, the power of the well-run, soundly financed organization to coordinate effort, to market itself, and to provide contractual reassurance of continued service. This is the model of the new IBM.

Open source frees everyone in the triad of user/client, vendor, and programmer.

The last to realize the net benefits will be the vendor who has captured dominance of a market. Those who have been successful with the old paradigm are always the last to recognize, understand, and adopt the new one.

The benefit to vendors generally is that they are freed from the pyramidal competition for market dominance that proprietary software creates, and from the lottery mentality that this generates. In this competition, most firms fail. The alternative is the potentially less lucrative but more stable service market, in which security is found in customer loyalty through quality and responsiveness, rather than the tenuous security of being the king everyone tries to dethrone.

The venture capitalist expects a lottery mentality, a "winner-take-all" competition, in which riches are sought (rather than wealth, which is a different thing). This mentality prefers the exclusive "product" market, which is easier to understand and to dominate, to the inclusive "service" market, which depends on customer relations and actual competence.

A shared knowledge base is incompatible with the "productized" model of proprietary "intellectual property" used in traditional IT business management models, but is essential for a professional business model. This transforms competition: instead of competing for proprietary knowledge, people compete for mastery of and discretion in applying common knowledge. This is the essence of "professionalism."

"Professional" implies acquired expertise, independence of judgment and action, and social recognition. The independence and social recognition lead to changes in styles of personnel recruitment and management.

Generations lived under copyright and patent law before someone realized that these legal restrictions permitted ideas to be bought and sold like hard goods and coined the phrase "intellectual property." In recent decades this concept has become part of our culture to the extent that many people actually believe that ideas are property. But ideas are not tangible; their expression may be.

Ideas are not "real" in the same sense that money is not "real." Most of our "wealth" is preserved in the orientation of magnetic dipoles within thin films or iron oxide coatings within complex machines. Ancient Native Americans, whose proxy of wealth was scarce beads, could at least touch their fiat money.

Both money and knowledge exist as entities in our society only because there are consistent rules by which we handle their expression. And both money and ideas must circulate in order to be maximally effective. Practices that hinder the appropriate exchange of either are detrimental to society at large.

To lock up intellectual product prevents it from becoming part of the common knowledge base that creates a professional services industry. Thus, to the extent that IT responds to a public need, it will inevitably become a public right, through one or another mechanism.

Knowledge as wealth follows its own economic laws. The economy of money is one of contrived and regulated scarcity. The economy of knowledge is one of enrichment through availability. That is, the fundamental, distinguishing characteristic of the knowledge economy is that it enriches minds only when it is widely shared: made publicly available (published and publicly stored in bookstores, libraries, and now on Internet servers).

Once upon a time, there was the relay... Much technological progress came from scientific application of academic research. It took a few decades before academia (which had pretty much ignored patent rights) realized that its graduates' ideas, which may have begun in its labs, were making tons of money in private industry. Only in the late 1950s and mid-1960s was there a rush among academic centers to keep and license patents that were commercially viable. (For a competent and detailed history of software innovation, see David Wheeler's long essay.)

IT progress began in the universities, but proprietary development quickly followed. At first, computers were used for obvious tasks such as inventory control, accounting, and management information. The first companies built their own hardware and wrote their own operating systems and application software. This integration of hardware and software, they eventually discovered, created a barrier for the client who might wish to find a new vendor, and "lock-in" was born (or, more accurately, recognized).

Companies adopting IT did so in ways that dramatically improved productivity, and vendors naturally felt that a significant chunk of that gain belonged to them, a just reward for the miracle they'd wrought. The expense of manufacturing and development of the first vertically-integrated systems and the tremendous productivity gains first required and then justified high fees. And vendors became rich, and lived high. And lo, competitors envied and clients sometimes chafed.

Many companies that prospered in the first couple of decades have passed away. Sperry, Univac, and Burroughs are all history. These became Unisys, which has all but vanished, like Control Data. (There's a list of vanished names from the 1940s and 1950s available to satisfy your curiosity, and Minnesota has some interesting connections to this history.) In any case, a single corporation soon established complete market dominance: IBM.

These companies were all faithful to the model of vertical integration, proprietary systems, and the lock-in model; competition was mainly to achieve vendor lock. Eventually, by the 1970's, the technology and the systems were well established, and hardware became almost a commodity -- "almost," because prices were still very high and profit margins of successful companies were enormous.

Beginning in 1969, Ken Thompson, Dennis Ritchie, M. D. McIlroy, and J. F. Ossanna collaborated at Bell Labs to write a portable operating system, UNIX. Because AT&T was prohibited from entering the IT marketplace, UNIX was freely offered to universities until 1984, when AT&T was permitted to enter the computer business and took UNIX back, charging high fees for what had been gratis.

This had the beneficial side effect of annoying Richard Stallman, who turned copyright law on its own head with the GPL, and who began writing valuable software. (This is a gross oversimplification of the process. Read Stallman's own account of this if you want the truth.)

Wozniak and Jobs built an open-architecture computer with commodity pricing and demonstrated the potential for public use of personal computers.

IBM, which owned the computer world then, was envious. IBM shot itself in the knee by producing the open-architecture PC, but thereby guaranteed the dissemination of personal computers.

Microsoft offered a closed, proprietary OS and (later) software at commodity prices. People discovered word processing, spreadsheets, financial management software, and games - and thereby discovered a universal "need" for home IT. Microsoft used this felt need to dominate and then monopolize the PC software industry.

The Internet was created; the HTTP protocol, Apache, the URL, and sendmail were added to it; and the Web came into existence. This is crucial for two reasons: first, it greatly expanded the sense of public "need"; second, it demanded cross-platform performance. For my story, the crucial importance of the Internet is that it instantly supported the creation of "knowledge wealth." Academics recognized this and promptly embraced it. It is an interesting aside that the business community did not instantly begin creating financial wealth from it, except for pornographers, who benefited from its unregulated and private character. (I was told recently that about half of all Internet bandwidth is used in the exchange of pornography.)

The result of the public availability of the Internet was further commoditization of software. Any time there is a broad public "need," commoditization will occur. In IT there have been two enormous pressures toward commoditization: on the business side, there is the restrictiveness to management that vendor lock-in entails; on the home side, there is the stranglehold that Microsoft holds on the home computer.

Right now, there is great pressure toward commoditization among businesses, as in the post-bubble economy many firms feel they cannot afford the high fees of traditional IT vendors.

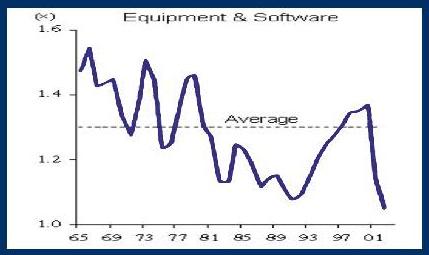

IT net capital investment over 40 years (X = expenditures / depreciation)

Source: Bureau of Economic Analysis, 2002

This graph demonstrates the progress of the gradual commoditization of IT, for as the power of silicon has grown exponentially, and computers have penetrated American society ubiquitously, the net capital investment in IT has drifted fitfully downward. The short-term economic phenomena are also clearly visible. You'll notice from this graph that

- Economic downturns clearly affect investment most strongly in the short term;

- Economic expansion is accompanied by increased IT capital investment.

Hence, IT capital investment is "discretionary" in the sense that management uses prosperity to fund efficiency gains.

But

- The microcomputer revolution in the '90's was a time of tremendous efficiency gains.

- The Internet boom is clearly visible;

- The general trend is toward lower current capital cost relative to past expense.

A key to commercial success is to have something that no competitor has: raw material, a patented item or process, a special knowledge of markets, or "Intellectual property."

On the other hand, a key to cultural advancement is to share new discoveries freely. These seem to be diametrically opposed needs. They are the needs of The Town and The Gown, based, as we've seen, on different values.

There was no intellectual property law until the industrial revolution brought mass production to printing. In the 19th century, mass printing made copying relatively easy, and "copyright" became an important restriction to preserve the financial rights of publishers and the integrity of the manuscript. Because copyright was at times overly restrictive, at least in Britain, a balancing concept of "fair use" evolved.

Interchangeable parts made patent law important, to ensure that the inventor who'd invested in research and development could recoup this investment before imitation was permitted. Patent durations have not increased significantly.

In the 1950's, beginning with computers, technological innovations exploded out of universities, which soon began to be interested in the income potential of inventions born in their institutions.

In the last quarter of the 20th century, the profitability of branding began to be understood well, which led to the general use of the new phrase, "intellectual property."

This has increased tension between "gown," which requires free availability of knowledge to function properly, and "town," which requires restrictions in order to enhance profitability.

In fact, knowledge is not physical "property" at all. What is property is the form in which that knowledge is expressed. The term "intellectual property" obscures the fact that ideas, like money, are not real. Both have value because of their scarcity, and both are expressed in various forms: on paper, on metal, in film, and in various iron oxide coatings. Both ideas and money exist today predominantly as altered magnetic dipoles within exotic and complex machines. These have more permanence than the ice on a winter lake only because energy is continually expended in maintaining them.

Trade Secret law has recently been used to threaten software authors. I judge that the main threat is the inconvenience of having to defend rather than actual loss, as reverse engineering is specifically permitted under standard trade secret law.

There are several different types of intellectual property, which differ functionally in commerce, although intellectual property law does not differentiate them. The distinctions here are somewhat arbitrary:

1: entertainment products and performances

2: cultural commercial icons (Logos, trademarks, identities such as Mickey Mouse)

3: informative writing

4: instructional materials

5: expressive writing (fiction, poetry)

Of these, cultural commercial icons warrant indefinite protection, as is happening under recent revisions of law. They are of great commercial value, and the Knowledge Economy would gain nothing from their entrance into the public domain. On the other hand, instructional materials and informative writing are very important to the Knowledge Economy and need to be publicly available. As long as such works are commercially viable, they warrant copyright protection in order to guarantee their widespread availability. But when they become commercially non-viable, they still have cultural importance, and should then enter the public domain. Expressive writing and entertainment products have highly variable commercial longevity, and as a result, most of these are lost to the culture as soon as their publication is no longer profitable.

An answer to this tension is to make duration of copyright related to continued commercial use and availability. A work should remain available to the knowledge economy. If it becomes commercially unavailable, publication rights should revert to the public domain after a period of absence from the market. An author or publisher should lose their exclusive right of publication after a period of commercial neglect. But modest royalties should be owed to the copyright owner by anyone exploiting it commercially.

This is simply a proposal to follow the model already created by the music performance industry. BMI (Broadcast Music, Inc.) & ASCAP (American Society of Composers, Authors & Publishers) are the licensing organizations which were founded so that the creators of music would be paid for the public performances of their recorded works. Any user of music who plays copyrighted musical works in public, and whose performances are not specifically exempt under the law, needs a license from BMI/ASCAP or the members whose works the user wishes to perform.

This model could be extended to the re-issuing of commercially dead written works. If there is any commercial benefit to re-publication, even indirect, then the copyright owner is owed a reasonable royalty. Is this model perfect? No. Is it functional? Yes. And it provides a means of fairly keeping old material culturally alive.

| Gown | Town |
|---|---|
| Values: | Values: |
| Results: | Results: |

The ancient conflict between town and gown is both more complex and less well defined than I've indicated in this table. This table has both light-hearted and serious intent. It's facetious in that it's simplistic: these disparate values can't be summarized succinctly and precisely. Nor is the parallel disparity between proprietary and free use of software code perfect. Yet the differences in values do exist, they are instructive, and the Town-Gown conflict reflects as accurately as any available metaphor the differences in values and priorities between the communal interest of free and open software and strictly proprietary interests.

The point is that our values create priorities that influence our reasoning and drive our choices. They also determine what we regard as ethical, so that they determine whom we respect and how we write laws and regulations.

At the same time, these opposing values are not exclusive: we can value knowledge and its benefits to society generally while we all understand the need to survive economically, to pay the bills and feed the kids and save for retirement. This need to survive and the ambition to thrive will always be stronger than ideals about shared knowledge until we feel secure economically. Parenthetically, a reason to form academic institutions is to create a measure of economic security so that knowledge can be pursued undistracted by the need to survive. And a defect, with regard to community values, of the capitalist system as it's evolved in our society is the doctrine that "ideal" competition requires continuous economic insecurity.

These tensions in the US have resulted in laws that strongly favor proprietary knowledge, to the great frustration of librarians and academicians. Still, when shared knowledge unequivocally benefits society, it must be given its due. Open source and open standards, for example, have demonstrated the power of shared knowledge in maintaining communications and information exchange on the Internet. There are many other arenas of communal need in which openly shared software infrastructure will create powerful and efficient systems and tools. This is particularly true in healthcare, where years of discussion and standards creation have gotten nowhere, in the sense that a patient's clinical record still cannot readily follow the patient from one specialist or institution to another during the process of care, except in hard copy.

Part of the tension between proprietary and communal interests is the idea of exploitation that is intrinsic to capitalism. In the jargon of commerce, a resource is successfully and justly exploited if an entrepreneur is able to use it to produce goods or services, adding value in doing so, and capturing this gain. In this use, exploitation is a favorable goal.

In the jargon of sociology and politics, the idea of exploitation denotes injustice. It implies that the advantage one person enjoys over another is used manipulatively and unjustly to keep the exploited person at a social or economic disadvantage.

When the resources controlled and exploited by the businessman are people, then the tension between these two senses of exploit tips in favor of the pejorative sense. It is social and financial exploitation of programmers' skills and clients' needs that has driven much of the open source revolution.

Free Software is Gown; IBM and Microsoft are Town

Though the values, priorities, and goals of the Knowledge Economy and the Proprietary Economy differ, both are needed: we must eat and learn. "Open Source" paradigms are ways to meld the values and benefits of "gown" with the need for income and financial stability of "town."

The key tension between free and proprietary software is that the "Intellectual Property" concept destroys the viability of "gown." "Gown" prefers freedom; but totally free knowledge terrifies "town." "Town" prefers "lock-in."

The extreme position of the Free Software Foundation is necessary: by defining one clear boundary, it creates room for less doctrinaire positions, and understanding its philosophical roots helps us better understand open software in general.

Likewise, the extreme position of those who would make all intellectual expression closed, who would make it the slave of profitability, energizes those who understand the importance of shared knowledge for social and cultural progress to seek a middle ground.

A middle ground is needed, for (despite the protests of the FSF) some restriction of intellectual expression is necessary on behalf of those who must sell it to eat; on the other hand, as Lawrence Lessig has made clear, society needs liberal "fair use" of ideas in order to advance culturally and intellectually.

Highly restrictive intellectual property laws permit unfair exploitation, enriching some unjustly and leaving others aside unjustly. Right now the laws very strongly favor restriction and inhibit fair use. This makes open licenses essential to the functioning of an open source community. Without them, we would see open ideas quickly pirated.

Programmers are locked in just as strongly as clients: employment contracts typically assign all ownership rights of the employee's intellectual production to the employer, sometimes even work produced outside of working hours and unrelated to the business. There is no assurance of royalties, not even of long-term employment. Open Source makes this pointless. It opens the programmer's jail cell, because there is no reason to insist on ownership of code that will be released anyway.

Clients are not only locked in, but helpless: the client does not have access to the code that runs its business, so it is at the mercy of the vendor regarding:

- Bug fixes, revisions, and upgrades;

- System functionality; and

- Capital improvements.

It is also helpless against abandonment and sunsetting.

Open Source mitigates this.

Client firms face potentially catastrophic expenses (or losses) because, when a vendor fails (or fails to serve), they typically have no rights whatever to code that may be the foundation of their business.

For example, a small hospital, with about $10M annual gross revenues, has for a decade used proprietary software to manage its financial systems. It was a mediocre but inexpensive system that satisfied its basic needs within its budget and its successful attempt to keep room rates low for its patients, who lived in the poorest county in the state.

The company offering this system was acquired by a competitor. In mid-1999, the new firm notified the old system's customers that their software was not Y2K compliant and would not be brought into compliance, and that the new firm's own software was available to these customers at an upgrade/conversion cost of only $2.5M.

Fortunately, the affected clients joined together in a lawsuit, and the new company discovered that it would be less expensive to bring the software into Y2K compliance than to fight a lawsuit.

For the clients to possess open code does not prevent this sort of unilateral abuse of their dependence by a vendor, but gives them options when it occurs.

When code is freed, the vendor may feel insecure, if it depended on lock-in to maintain non-competitive fees, failed to provide services important to the client, or forced the timing of upgrades. Whether the client or the programmer is sympathetic is not a question asked by Capitalism. Altruism is an ideal of Community...

The Programmer is free:

- To contribute to any free project,

- To work independently, or

- To change employers without changing projects.

The Client is free:

- To seek development, training, maintenance, enhancement, and support competitively, or

- To make conversions and upgrades on a schedule that fits its own business climate.

Freedom requires all the participants in the IT relationship - programmers, clients, and vendors - to be fair with one another.

Freedom makes unjust exploitation much more difficult, because adopting open source software shifts control of the software toward the client. But competent vendors will actually be more secure in an open-source environment, because the secret to long-term business relationships is service and satisfaction, while lock-in itself may become a frustration from which clients are motivated to escape.

The Vendor is safer, actually, than it thinks.

The vendor has security in several ways unrelated to "lock-in." It will always have special expertise that can help maintain its present business relationships: a vendor should normally understand the needs of clients in general better than any single client does; i.e., it should understand its market. The IT vendor is in a better position to offer stable and interesting work to programmers, and by serving many clients it gains economies of scale that permit software development which no single client could undertake. IT vendors should also understand the labor market for programmers and be able to recruit and compensate them more effectively.

Vendors will always have a certain amount of control of clients' fate:

Completely identical distributions are impossible, for humans have an irresistible urge to customize, and each client feels it has many unique characteristics. This drive for customization creates a "special relationship" between vendor and client.

Client control reduces the likelihood of conversion and vendor change. The frustration of lock-in drives some conversions; with Open Source, these frustrations are ameliorated because the client always has the possibility of seeking another source of service. People prefer to continue their relationships as long as they can be made to work adequately, because of all the complex personal and business influences that militate against change. Thus there will always be powerful inertia in favor of preserving any client-vendor relationship. Change rarely occurs for trivial reasons.

Open source benefits vendors financially in that it removes the necessity for every vendor to re-invent the wheel by independently writing its entire code and database infrastructure. Competitors can cooperate on R & D where needs overlap, and shared R & D reduces development risk and capital expense while improving the quality of software available to an industry, improving productivity and financial efficiency. This saving reduces the vendor's cost exposure, or allows vendors to increase their investment in customization, usability, and service, which increase customer loyalty.

When the client is free, resentment abates, and good vendor service is rewarded with loyalty. Not being able to make the client a captive may cause the vendor to feel insecure; but vendor lock is false security because the locked-in client is hoping for escape.

In addition, some clients inherently require open solutions or cannot tolerate non-control of their applications. These particularly include governments and the military.

Is There a Comparable Open Business Model Already?

Medicine is the prototypical Open-"Source" Community, and is a suitable analogy for an Open Source software business model, because at one time medical knowledge was heavily proprietary. Surgeons and physicians who made new discoveries didn't publish them (indeed, there was no way to do so), but used their special knowledge to become rich and famous. Mass printing didn't develop until the 18th century, and medical knowledge was not widely published until medical journals were established in the 19th. The public media has taken consistent interest in medical discoveries only since about the 1980's. In the 1950's, for example, the American Medical Association began a magazine on health for the public precisely because it could not interest the media in doing so.

Have you noticed that doctors have become impoverished by sharing knowledge? No, what has happened is that publication of discoveries, and subjecting them to verification by the scientific method, has steadily refined the quality of clinical medicine.

Consider law as well. As Michael Tiemann, CEO of Cygnus Solutions, the first Open Source firm, says, "Common Law is legal code that is free to all people equally." The law must be open, because it applies to everyone. We would live a Kafkaesque nightmare if some laws were knowable only by the enforcers. Indeed, the sheer complexity of our laws and regulations requires businesses to employ lawyers to keep this nightmare at bay. Whether or not this is good, to the non-technical user computer applications are even more complex and mysterious than the law, and there is a similar sense of need for expert counsel.

In fact, all the professions require, in some form, a common knowledge base. Thus the business model for open source software is the professional services model.

There are several professional services occupations: not only medicine and law, but also accounting, finance, and any area requiring expertise. The features of these professions are:

- A common body of public knowledge;

- Difficulty of mastery (jargon must be learned, experience and judgment acquired);

- Acquiring expertise requires time and money;

- This (plus licensing) creates scarcity;

- Competition is based on quality and satisfaction; and

- Personnel management is very different from that of engineering (intellectual-property) firms.

Though medicine, law, accounting, and finance all operate with bodies of open knowledge, individuals differ in their mastery of this knowledge and in the skill and wisdom with which they use it. These differences generate specialization and competition. It is the barriers to excellence that keep salaries up, not exclusive knowledge.

Engineers remain serfs because, although they have a common body of knowledge, there is no model of openness in engineering.

Bob Young of Red Hat, Inc. has written an interesting essay on the open source business model from his perspective as CEO of the most successful open source startup, in Open Sources: Voices from the Open Source Revolution (O'Reilly, 1999). He emphasizes that, commercially, open source software is a commodity product, like automobiles or food, in which a company succeeds through branding.

I do not disagree with his analysis; I am emphasizing that the inexpert, the non-technical "end-user," needs service, from trained professionals, and thus something that is technologically a commodity for the expert has for the user all the characteristics of the professional services model.

A project does not become Open Source merely by taking on the name or by publishing the code on the net. Open source is created by observing a set of release and licensing criteria and by following a set of practices that enhance community development. And if an Open Source development effort is to see widespread use by non-technical users, it must be made physically, intellectually, and technologically accessible to them.

I: Release and licensing criteria: These are most clearly spelled out in the Ten Ideals of OpenSource.org. These criteria do not make a project successful, but are essential to its functionality.

II: Functional criteria. Open projects will be most successful and productive if they are designed to function using open source best practices.

III: Productization criteria: The project's proposal should include a feasible plan for productization and deployment.

Let's look at these three criteria in detail.

I. Release and licensing criteria. These are the Ten Ideals of OpenSource.org:

1. Unrestricted Redistribution is required; it may be either gratis or for a fee.

2. Source Code must be provided, and must be in the programmers' preferred form for easy modification, not obfuscated.

3. Modifications must be redistributable, under the same terms as the original code.

4. Integrity of author's code protected: An open-source license must guarantee that source be readily available, but may require that it be distributed as pristine base sources plus patches. It also may prohibit use of the original brand with modified code.

5. No Discrimination Against Persons or Groups.

6. No Discrimination Against Fields of Endeavor.

7. No additional licenses or non-disclosure agreements permitted.

8. The License must not be specific to a product, that is, it must be permissible to extract the program from its context.

9. The License must not restrict other software: e.g., the license must not insist that all programs distributed together must be open-source software.

10. The License must be technology-neutral: no provision of the license may be predicated on any individual technology or style of interface, such as "click-wrap."

II. Functional criteria.

The open source community has thrived not merely because it releases code, but because there is a protocol for development. To be effective as open source, a project must be designed to enhance participation by developers and users. A productive Open Source project will:

1. Provide code freely.

2. The code must be written:

A) To be self-documenting: The code must within itself contain internal documentation that makes it understandable by any programmer having knowledge of the language in which it is written.

B) To be modular: The program must be written in modules that are each small enough for a new programmer to comprehend and independent enough to ease debugging, and it must accept pluggable modules that enhance or extend the functions of the base code.

3. A scheme and site for concurrent documentation and versioning must be provided.

4. A forum for discussion and debate (a discussion group) must be provided.

5. Leadership. The project must define its leadership and mode of decision-making. It should also indicate how new participants will be identified as acceptable (how trust will be communicated to participants), and outline a strategy for handling necessary or unexpected leadership changes.

6. Maintenance. What will be the project's strategy for maintaining the code base, for protecting stable code, and for identifying discrete releases?

7. Packaging and distribution. Who will be responsible for producing installable code and an installation program?
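For readers who do not program, criteria 2A and 2B can be made concrete with a small example. What follows is a minimal sketch in Python (an assumption: nothing here prescribes a language) in which every function carries its own documentation and new capabilities are added as plug-in modules without modifying the base code. The registry, the function names, and the two sample plugins are hypothetical, chosen only to illustrate the idea, not taken from any real project.

```python
"""A minimal plugin registry, sketching self-documenting, modular code.

The base code (register, run) knows nothing about specific plugins; each
plugin is a small, documented function that registers itself by name.
All names here are hypothetical, for illustration only.
"""
from typing import Callable, Dict

# Registry mapping a plugin name to the function that implements it.
PLUGINS: Dict[str, Callable[[str], str]] = {}


def register(name: str) -> Callable[[Callable[[str], str]], Callable[[str], str]]:
    """Return a decorator that adds a text-processing function to the registry."""
    def decorator(func: Callable[[str], str]) -> Callable[[str], str]:
        if name in PLUGINS:
            raise ValueError(f"plugin {name!r} is already registered")
        PLUGINS[name] = func
        return func
    return decorator


def run(name: str, text: str) -> str:
    """Apply the named plugin to text; adding plugins requires no change here."""
    try:
        plugin = PLUGINS[name]
    except KeyError:
        raise KeyError(f"no plugin named {name!r}; available: {sorted(PLUGINS)}") from None
    return plugin(text)


# Two sample plugins. A new contributor could add a third without touching run().
@register("upper")
def to_upper(text: str) -> str:
    """Return the text in upper case."""
    return text.upper()


@register("strip")
def strip_whitespace(text: str) -> str:
    """Remove leading and trailing whitespace."""
    return text.strip()


if __name__ == "__main__":
    print(run("strip", "  hello  "))  # prints "hello"
    print(run("upper", "hello"))      # prints "HELLO"
```

The design choice worth noticing is that a newcomer only has to read one small, documented function to contribute a plugin, which is the kind of low barrier to participation the criteria above aim for.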

III. Productization.

I don't mean to imply that open source software must be sold or made into an object in order to be true-blue Open Source. What I mean is that a project will be most successful if its team keeps its software in a "productized" form that new participants can easily install for use or development. Doing this is crucial if development is to yield an identifiable benefit for anyone besides the developers. Thus, it's important, if the project is to have legs, to have a feasible plan for deploying the resulting software to its target audience.

Ultimately, when a business model emerges from a successful open source development project, "productization" will include plans for professional commercial distribution, training, and support.

User Participation.

Any open project will be most successful if it attracts and encourages user participation in reporting functionality and support issues. This is typically achieved by creating infrastructure for interchange, such as a web site, a "bugzilla," and a discussion forum for users, ideally moderated if end-users are to be included.

Ultimately, "User-developed" applications and systems -- that is, those that are developed with the continual participation of the end user -- will have the best ergonomics. Programmers and end-users need to work together. The great strength of free and open source is that the programmer is not insulated from users by middle management and marketing types; the user is not prevented from discussing needs with the programmer by a supervisory hierarchy bent on protecting its discretionary territory.

Thus, if any industry is to have effective open development, users must participate in developing applications that meet their shared needs. The world is filled with the wreckage of carefully-designed systems that were created without the participation of the intended users; and some terrible programming design has resulted in successful projects because the user ergonomics were satisfying.

Open Source development is the best mechanism for collaboration of users and programmers.

Before we talk about the emergence of programming as a "profession," I suppose it would be kind of me to tell you what I mean. In case it hasn't yet been clear, I am proposing that programming expertise is becoming as important to society as the traditional professions of medicine, law, and accounting, and that open source code and procedures will permit programmers to offer themselves, solo or in firms, to customers who need their expertise, in ways that are analogous to medicine in particular.

By "professional" I mean an expert trained in the mastery and use of a common body of knowledge which the ordinary person is not expected to master. I am not referring to vernacular euphemistic uses of "professional" in which it's applied to athletes who are paid for performing, or derogatory uses such as the "oldest profession," even though some individuals may at times seem to deserve it.

There are at least three requirements for the existence of a profession:

- A body of publicly available knowledge that is important to a significant part of society but which the ordinary person can't be expected to master. This creates the need for experts and public availability permits the possibility of accessible training.

- A class of masters who have studied this body of knowledge and have acquired expertise in its use.

- An expected benefit from professional services, that warrants payment substantial enough to encourage people to enter the profession.

The traditional three professions have a finely-grained structure of varying degrees of specialization and different levels of expertise. The term, "programmer" has no more specificity than "health care worker." The nurse aide is valuable, and in some roles indispensable, but is no more highly trained than the person who has learned to throw together simple web pages -- yet this person may be called a programmer. And at the other end of the spectrum we have specialists such as cardiothoracic surgeons, who have focused on excellence in a very narrow groove, or generalists such as family physicians.

The programming profession has its own such specialists, experts who understand operating systems, databases, particular languages, and so on. These are not as well understood by the public, partly because they are emerging; partly because they haven't formed groups such as the American Kernel-Hackers' Society and held press conferences to explain to the Times and the Post just how indispensable they are to the smooth functioning of Western Society, or created specialty certification, such as my own Board Certification in Internal Medicine and Fellowship in The American College of Physicians.

There are obvious differences between the traditional professions and programming. State or federal licensing and regulation are required in proportion to the harm a professional can do. We have not yet seen a need for licensing or regulation of programmers, and I hope we will see none. But as professionalism emerges, we will need formal ways of quickly identifying the type and area of expertise of programmers. And as languages and technology continue to evolve quickly, this must be rather fluid.

Let's consider some of the fine-grained elements that will form the basis of professionalism.

What Must Evolve?

1: Some of the requirements are already in place. These extant features include:

- A Free Knowledge Base (software)

- Communications Technology (Internet)

- Rights to release (copyleft, etc.)

- Common needs (developer/user communities)

- Common practices among groups for

  - Debate (discussion groups)

  - Development (programming techniques)

    - Self-documenting, modular code

  - Documentation (CVS's)

- User awareness and participation (sort of)

- Commercial open source applications (coming along)

These features are the "bricks" from which the "wall" of "best practices" has been built. Those practices are how the "magic" of free and open source happens: when they are followed, motivated and skilled workers can achieve excellent results while working at great distances from each other.

These "best practices" require all the connectivity of the Internet, plus modular, self-documenting code, concurrent documentation, and active "discussion" (debating) groups with leadership and organization.

But developing standards is merely an intellectual exercise; it is necessary to write working code concomitantly.

What Else Must Evolve?

2: Some of the requirements are forming. Formal training has always been available in universities; formal specialty training is lacking (whether it's appropriate, I don't know). Certification efforts are beginning, though none has the rigor of, for example, board certification in a medical specialty. Those that exist are more analogous to the various minor certifications in nursing or in financial sectors, such as those offered by Microsoft or Sun, rather than career-molding certifications.

As the programming profession gains structure and form, employment markets are likely to become more sophisticated. Partly this requires education of the lay administrators who are assigned hiring duties. It's easy to say markets will evolve; it's harder to envision the directions they'll take. A profession inevitably will evolve standards of training, certification, and possibly regulation. Employment markets develop once clients accept this professional identity. I see no sign of a need for regulatory licensing of IT professionals: there is little fraud, because in a meritocracy like IT false expertise quickly becomes apparent, and programming does not intrinsically involve life or health, even though certain applications might.

There seems to be a need to restructure professional training, as noted in an eloquent essay by Dan Kegel. Several certification programs for Linux exist. Some are vendor-specific, some are vendor-independent, and others are offered by "training institutes." The vendor-specific program reputed to have the highest standards is Red Hat's. Vendor-independent certifications include those of the Linux Professional Institute and Sair, which in July 2002 was transferred to the Linux Professional Group. Training institutes such as HOTT also claim to offer certification.

Acceptance among clients is what ultimately gives traction to certification; I don't know how this is progressing. Experts are always needed, but the important question is: are they recognized?

Employment markets are beginning to evolve, though I think they are still informal, for example Mojolin or ITmoonlighter.

Natural arenas for Open Source

There are two chief issues that create a need for open source.

One is a need to share protocols or data structures. Healthcare fits this form.

The other is the need to maintain control over platforms, security, applications, or protocols. Governments and military fit this form.

The purpose of education is to disseminate knowledge! There was a reason that I used the town-gown metaphor to describe the contest between free and proprietary software. Free software originated in the academic community, which thrives only when knowledge is free. The term "open source" is in some sense a betrayal of educational values and a sop to half-hearted acceptance of what in the beginning was truly free, that is, liberated, software. But it is even more a betrayal of educational values for academicians to use proprietary systems and software when adequate free or open systems are available.

Even more importantly, the highest quality IT education occurs when students can actually use code that is in current use. Free and open source makes this possible.

Because of the commonalities of medical practice from one location and country to another, the adoption of free and open software for the data infrastructure of electronic records would greatly improve the quality of clinical communication between healthcare workers responsible for any particular patient. There is every reason to expect this to be feasible: There is a common heuristic, Western Medicine. There is need for extensive data interchange. There has been extensive work on standards.

But there has been a lack of open-source standards implementations in medicine. Since 1998, there has been some progress, but the effort has not caught the attention of vendors as it must eventually.

Fundamentally, within governments, there are three important pressures:

First, the military must control its applications. It cannot afford at any time to be the hostage of a private company, especially one that is under the flag of another nation.

Second, a government in the long run cannot afford politically to be controlled by a segment of its own business community. Never mind that businesses have always jockeyed for such control, for either business or political gain; if such control is achieved, this is in the long run dangerous both to the government and the firm.

Third, if a government adopts the protocols or file formats of a single vendor, this by default requires any subjects or firms who do business with it (everyone, at some point) to purchase that firm's software. This has been clearest in the widespread use in the US federal government of the Microsoft Word *.doc format for nearly all documents. This was done despite the fact that the specific fonts and formats used in most documents are unimportant to their content or function, and Word has always supported ASCII text and the proprietary but open .rtf format.

As the Internet has become widely available, this has been mitigated by the increasing use of formats that can be viewed directly in common browsers, either Adobe's proprietary but open *.pdf format or formats that browsers support natively, particularly HTML.

The use of these open formats has been driven, however, not by an understanding of the unfair commercial advantage that the .doc format gives Microsoft, but by users' desire for convenient viewing.

Open Source doesn't own consultants or have an RFP process. If you don't know how the government purchases IT services, this may seem off topic to you. But it is a crucial hindrance to adoption of free and open source software by the government, because, except for those agencies that have their own programming staffs, there has evolved a slow, expensive ritual dance: First the agency "develops" a software need, sometimes with the help of "expert consultants." Then, it uses consultants to seek from available vendors an application or system or a development process to meet this need. Vendors compete for acceptance by responding formally (and expensively) to the agency's Request For Proposal notice.

There are many pigs at this trough, but the greatest functional deficiency is that, once again, the workers who actually use the system are isolated from the programmers who end up doing the development, not only by layers of supervision but also by the consultants, and both agency and vendor are often frozen in place by the RFP.

Efforts are under way, for example in Texas, to reform this process so that governments can consider open source software.

Internationally, many governments are exploring open source software, as reported, for example, in Microsoft's December 31, 2002, 10-Q filing with the US Securities and Exchange Commission. The Free/Libre/Open Source Software (FLOSS) study, from the University of Maastricht's International Institute of Infonomics, recommended that governments require the use of open-source software.

And in the Efficient World, license fees are simply not affordable; there is no legal alternative to open source software.

Reducing the military to its essence, one "constant" is that the military must control its mission-critical functions. The only way to have complete control of mission-critical software is to own the code (whether or not this code is obtained on the open market). In peacetime, the military is often slack about control, but all functions are mission critical in war.

Highly reliable proprietary closed systems are of course acceptable if the vendors are subjects of the government which the military serves, but closed systems from foreign vendors are a risk.

And unreliable closed systems are simply dangerous to military missions. Remember the Yorktown, a Navy "Smart Ship" running Windows NT that had several system failures in 1998. The article I've cited is one of many; this particular one has the greatest "face validity." The MITRE Corporation has published a study of the Use of Free and Open-Source Software (FOSS) in the U.S. Department of Defense, a 152-page, 1.4MB PDF document that lays out reasons for its use. It is well organized, clearly written, and well worth a look.

Healthcare, in particular, has an enormous and unfulfilled need to produce exchangeable documents as patients pass from one specialist to another and from clinic to hospital and nursing home settings of care. Portability of records also is important to patients who move from one location to another.

Yet right now, despite the years of effort devoted to HL-7, it is essentially impossible for a patient's clinical record to follow the patient electronically from one institution or practice to another. This greatly hinders good quality care, as physicians cannot respond to information about past or recent health events that are hidden from them behind the barrier of non-communicability.

The portability of the electronic medical record is of great importance to avoiding medical errors of omission, but no significant success has been achieved in making records portable. HL-7 has consumed enormous effort, but it long ago grew past a manageable size and has not included the pluggable software modules that should have accompanied its development.

Healthcare software vendors have neglected this need. Instead, each has merely developed proprietary tools and protocols in the vain hope of eventually dominating and controlling this market as the single solution to electronic medical records.

Medical administrators, skilled and altruistic though they are, have utterly failed to understand the need for portability or to work effectively toward this goal.

Even the one organization that should naturally have produced electronically sharable records, the federal government, initially neglected this need. A well-designed, functional, comprehensive medical record system, VistA, was gradually created by programmers and users in the Veterans Administration Hospital system. This work was adopted by the Department of Defense and by the Indian Health Service. But did these agencies work together to ensure that records could follow patients? No. Not only could the records of servicemen not follow them from the military (DOD) to the VA system, but as VistA was adopted at various bases, no provision was made to send records electronically from one base to another! This is a failure of management, not programming.

I should step off the soapbox and note that the VA did recognize the need for portability in the 1990s and put in place several mechanisms, such as the Patient Data Exchange module and the Master Patient Index (MPI), so that the system now has that capability. The DOD is also working toward portability, and there is now a presidential mandate to bring the DOD and VA systems together so that service medical records are available for veteran care. The VISTA Monograph collection describes the systems that VistA comprises. The HardHats have published a very interesting history of VistA. (Thanks to Peter J. Groen of the VA for correcting me on certain VistA points.)

Do you doubt the general need for electronic records portability? Listen to a typical story, which is a composite to protect confidentiality.

Mary McLain was 48 years old, and had had diabetes for 20 years, when one night at about 3 am she began having trouble breathing. Her doctor happened not to be available then, and the hospital emergency room had no access to her doctor's records. Mary did not exactly remember what medications she was taking. In her fright and distraction she didn't think to bring them, and didn't think she really had time to pack them all up anyway: the ones she took at bedtime were in her bedroom next to her toothbrush, the ones she took at breakfast were in the kitchen, and the ones she took at work were in her purse. She hadn't memorized or written down the exact names and doses. The names were too complicated to read anyway; how could anybody remember those?

Mary didn't remember everything her doctor had done, such as past tests and their results; she only knew that her diabetes seemed pretty well controlled. When asked, "Do you smoke?" she said, "No," but didn't add that she had quit smoking 5 months before and was taking a little snuff to keep from feeling nervous and to stop the weight gain. The ER doctor heard wheezing and gave her an asthma treatment; the wheezing improved, and they sent her home. Because they didn't have access to any clinical notes, they didn't know that she'd never had asthma, that she was an ex-smoker, or that her doctor had done a cardiac stress test a year before with suspicious results. Actually, she was having a heart problem while she was being treated for asthma.

The next week Mary saw her doctor, who figured this out because all the key clues were in her chart, and referred her to a cardiologist. He sent copies of her records, but they were still somewhere in transit when she actually saw the cardiologist. The cardiologist repeated several hundred dollars' worth of tests whose results were in that packet, in order to be able to give Mary some answers while she was still with him.

The cardiologist referred Mary to a lung specialist to better define her smoking-related lung disease. This pulmonologist was located in another clinic some miles away, and when Mary saw him, the clinical notes from the cardiologist were available, but none of the records from her own doctor (who did not even know that this referral had been made). Mary of course assumed that the pulmonologist would send her test results back to her own doctor, but the cardiologist had made the referral, and thus her regular doctor and the pulmonologist didn't know each other existed.

And so, when Mary saw her own doctor, it was impossible to follow the pulmonologist's unknown recommendations. But her most recent mammogram results were back, and abnormal, and off Mary went to see a surgeon, in yet another clinic in town, about the abnormal spot in her left breast that probably was OK but should be biopsied to make sure.